

Friday, November 20, 2020

Production Best Practices: Security

Overview

The term “production” refers to the stage in the software lifecycle when an application or API is generally available to its end-users or consumers. In contrast, in the “development” stage, you’re still actively writing and testing code, and the application is not open to external access. The corresponding system environments are known as production and development environments, respectively.

Development and production environments are usually set up differently and have vastly different requirements. What’s fine in development may not be acceptable in production. For example, in a development environment you may want verbose logging of errors for debugging, while the same behavior can become a security concern in a production environment. And in development, you don’t need to worry about scalability, reliability, and performance, while those concerns become critical in production.

Note: If you believe you have discovered a security vulnerability in Express, please see Security Policies and Procedures.

Security best practices for Express applications in production include:

- Don’t use deprecated or vulnerable versions of Express

- Use TLS

- Use Helmet

- Use cookies securely

- Prevent brute-force attacks against authorization

- Ensure your dependencies are secure

- Avoid other known vulnerabilities

- Additional considerations

Don’t use deprecated or vulnerable versions of Express

Express 2.x and 3.x are no longer maintained. Security and performance issues in these versions won’t be fixed. Do not use them! If you haven’t moved to version 4, follow the migration guide.

Also ensure you are not using any of the vulnerable Express versions listed on the Security updates page. If you are, update to one of the stable releases, preferably the latest.

Use TLS

If your app deals with or transmits sensitive data, use Transport Layer Security (TLS) to secure the connection and the data. This technology encrypts data before it is sent from the client to the server, thus preventing some common (and easy) hacks. Although Ajax and POST requests might not be visibly obvious and seem “hidden” in browsers, their network traffic is vulnerable to packet sniffing and man-in-the-middle attacks.

You may be familiar with Secure Sockets Layer (SSL) encryption. TLS is the successor to SSL, so if you were using SSL before, consider upgrading to TLS. In general, we recommend Nginx to handle TLS. For a good reference to configure TLS on Nginx (and other servers), see Recommended Server Configurations (Mozilla Wiki).

Also, a handy tool to get a free TLS certificate is Let’s Encrypt, a free, automated, and open certificate authority (CA) provided by the Internet Security Research Group (ISRG).
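When TLS terminates at a reverse proxy such as Nginx, the app itself can still refuse plain-HTTP traffic. The following is a minimal sketch rather than anything built into Express: `redirectToHttps` is a hypothetical middleware name, and it assumes `req.secure` reflects the original protocol, which behind a proxy requires the `trust proxy` setting.

```javascript
// Hypothetical middleware: redirect any plain-HTTP request to its HTTPS URL.
// Assumes Express-style req/res objects; req.secure is true for HTTPS requests.
function redirectToHttps (req, res, next) {
  if (req.secure) {
    return next()
  }
  // 301 tells clients that the HTTPS URL is the permanent location.
  res.redirect(301, 'https://' + req.headers.host + req.originalUrl)
}

// Usage in an Express app (app is assumed to exist):
// app.set('trust proxy', 1)
// app.use(redirectToHttps)
```

Registering the middleware before any routes means no handler ever sees an unencrypted request.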

Use Helmet

Helmet can help protect your app from some well-known web vulnerabilities by setting HTTP headers appropriately.

Helmet is actually just a collection of smaller middleware functions that set security-related HTTP response headers:

- csp sets the Content-Security-Policy header to help prevent cross-site scripting attacks and other cross-site injections.

- hidePoweredBy removes the X-Powered-By header.

- hsts sets the Strict-Transport-Security header that enforces secure (HTTP over SSL/TLS) connections to the server.

- ieNoOpen sets X-Download-Options for IE8+.

- noCache sets Cache-Control and Pragma headers to disable client-side caching.

- noSniff sets X-Content-Type-Options to prevent browsers from MIME-sniffing a response away from the declared content-type.

- frameguard sets the X-Frame-Options header to provide clickjacking protection.

- xssFilter sets X-XSS-Protection to enable the Cross-site scripting (XSS) filter in most recent web browsers.

Install Helmet like any other module:

$ npm install --save helmet

Then to use it in your code:

// ...

var helmet = require('helmet')

app.use(helmet())

// ...
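To make concrete what "setting HTTP headers appropriately" means, here is a hand-rolled sketch of a few of the headers listed above. It is not Helmet's actual code; `basicSecurityHeaders` is a hypothetical name and the header values are illustrative defaults, so prefer Helmet itself in a real app.

```javascript
// Hand-rolled sketch of a few headers that Helmet manages; not Helmet's code.
function basicSecurityHeaders (req, res, next) {
  // Stop browsers from MIME-sniffing away from the declared content type.
  res.setHeader('X-Content-Type-Options', 'nosniff')
  // Only allow framing by pages from the same origin (clickjacking defense).
  res.setHeader('X-Frame-Options', 'SAMEORIGIN')
  // Ask browsers to use HTTPS for the next 180 days (the value is in seconds).
  res.setHeader('Strict-Transport-Security', 'max-age=15552000; includeSubDomains')
  // Do not advertise the framework.
  res.removeHeader('X-Powered-By')
  next()
}

// Usage in an Express app (app is assumed to exist):
// app.use(basicSecurityHeaders)
```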

At a minimum, disable the X-Powered-By header

If you don’t want to use Helmet, then at least disable the X-Powered-By header. Attackers can use this header (which is enabled by default) to detect apps running Express and then launch specifically-targeted attacks.

So, best practice is to turn off the header with the app.disable() method:

app.disable('x-powered-by')

If you use helmet.js, it takes care of this for you.

Note: Disabling the X-Powered-By header does not prevent a sophisticated attacker from determining that an app is running Express. It may discourage a casual exploit, but there are other ways to determine an app is running Express.

Use cookies securely

To ensure cookies don’t open your app to exploits, don’t use the default session cookie name and set cookie security options appropriately.

There are two main middleware cookie session modules:

- express-session that replaces express.session middleware built-in to Express 3.x.

- cookie-session that replaces express.cookieSession middleware built-in to Express 3.x.

The main difference between these two modules is how they save cookie session data. The express-session middleware stores session data on the server; it only saves the session ID in the cookie itself, not session data. By default, it uses in-memory storage and is not designed for a production environment. In production, you’ll need to set up a scalable session-store; see the list of compatible session stores.

In contrast, cookie-session middleware implements cookie-backed storage: it serializes the entire session to the cookie, rather than just a session key. Only use it when session data is relatively small and easily encoded as primitive values (rather than objects). Although browsers are supposed to support at least 4096 bytes per cookie, to ensure you don’t exceed the limit, don’t exceed a size of 4093 bytes per domain. Also, be aware that the cookie data will be visible to the client, so if there is any reason to keep it secure or obscure, then express-session may be a better choice.

Don’t use the default session cookie name

Using the default session cookie name can open your app to attacks. The security issue posed is similar to X-Powered-By: a potential attacker can use it to fingerprint the server and target attacks accordingly.

To avoid this problem, use generic cookie names; for example using express-session middleware:

    var session = require('express-session')

    app.set('trust proxy', 1) // trust first proxy
    app.use(session({
      secret: 's3Cur3',
      name: 'sessionId'
    }))

Set cookie security options

Set the following cookie options to enhance security:

- secure - Ensures the browser only sends the cookie over HTTPS.

- httpOnly - Ensures the cookie is sent only over HTTP(S), not client JavaScript, helping to protect against cross-site scripting attacks.

- domain - Indicates the domain of the cookie; use it to compare against the domain of the server in which the URL is being requested. If they match, then check the path attribute next.

- path - Indicates the path of the cookie; use it to compare against the request path. If this and domain match, then send the cookie in the request.

- expires - Use to set expiration date for persistent cookies.

Here is an example using cookie-session middleware:

    var session = require('cookie-session')
    var express = require('express')
    var app = express()

    var expiryDate = new Date(Date.now() + 60 * 60 * 1000) // 1 hour

    // cookie-session reads cookie options from the top level of the options object
    app.use(session({
      name: 'session',
      keys: ['key1', 'key2'],
      secure: true,
      httpOnly: true,
      domain: 'example.com',
      path: '/foo/bar',
      expires: expiryDate
    }))
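To see how these options reach the browser, it can help to look at the Set-Cookie header they produce. The sketch below builds such a header by hand for illustration only; `serializeCookie` is a hypothetical helper, not part of cookie-session or express-session, and it covers only the options discussed above.

```javascript
// Illustrative only: builds a Set-Cookie header value by hand.
// serializeCookie is a hypothetical helper, not part of any session module.
function serializeCookie (name, value, options) {
  const parts = [name + '=' + encodeURIComponent(value)]
  if (options.domain) parts.push('Domain=' + options.domain)
  if (options.path) parts.push('Path=' + options.path)
  if (options.expires) parts.push('Expires=' + options.expires.toUTCString())
  if (options.httpOnly) parts.push('HttpOnly') // hidden from client JavaScript
  if (options.secure) parts.push('Secure') // sent over HTTPS only
  return parts.join('; ')
}

const header = serializeCookie('session', 'abc123', {
  secure: true,
  httpOnly: true,
  domain: 'example.com',
  path: '/foo/bar',
  expires: new Date(Date.now() + 60 * 60 * 1000) // 1 hour
})
console.log(header)
```

The browser compares the Domain and Path attributes with each request URL, and the Secure and HttpOnly flags restrict when and how the cookie is exposed.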

Prevent brute-force attacks against authorization

Make sure login endpoints are protected to make private data more secure.

A simple and powerful technique is to block authorization attempts using two metrics:

- The first is the number of consecutive failed attempts by the same user name and IP address.

- The second is the number of failed attempts from an IP address over some long period of time. For example, block an IP address if it makes 100 failed attempts in one day.

The rate-limiter-flexible package provides tools to make this technique easy and fast. You can find an example of brute-force protection in its documentation.
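The two metrics can be sketched with plain in-memory counters. This is an illustration of the idea only, not the rate-limiter-flexible API: the `LoginThrottle` class, its method names, and the default limits are all hypothetical, and a production setup would keep the counts in a shared store so that every app instance sees them.

```javascript
// In-memory sketch of the two counters described above.
// All names and default limits here are hypothetical.
class LoginThrottle {
  constructor ({ maxConsecutive = 5, maxPerIpPerDay = 100, now = Date.now } = {}) {
    this.maxConsecutive = maxConsecutive
    this.maxPerIpPerDay = maxPerIpPerDay
    this.now = now
    this.consecutive = new Map() // "username|ip" -> consecutive failure count
    this.perIp = new Map() // ip -> timestamps of failures in the last 24 hours
  }

  // Drop failures older than 24 hours and return the remaining timestamps.
  recentFailures (ip) {
    const cutoff = this.now() - 24 * 60 * 60 * 1000
    const recent = (this.perIp.get(ip) || []).filter((t) => t > cutoff)
    this.perIp.set(ip, recent)
    return recent
  }

  isBlocked (username, ip) {
    const streak = this.consecutive.get(username + '|' + ip) || 0
    return streak >= this.maxConsecutive ||
      this.recentFailures(ip).length >= this.maxPerIpPerDay
  }

  recordFailure (username, ip) {
    const key = username + '|' + ip
    this.consecutive.set(key, (this.consecutive.get(key) || 0) + 1)
    this.recentFailures(ip).push(this.now())
  }

  // A successful login resets the consecutive counter for that user and IP.
  recordSuccess (username, ip) {
    this.consecutive.delete(username + '|' + ip)
  }
}
```

A login route would call `isBlocked()` before checking credentials, then `recordFailure()` or `recordSuccess()` depending on the outcome.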

Ensure your dependencies are secure

Using npm to manage your application’s dependencies is powerful and convenient. But the packages that you use may contain critical security vulnerabilities that could also affect your application. The security of your app is only as strong as the “weakest link” in your dependencies.

Since npm@6, npm automatically reviews every install request. You can also use npm audit to analyze your dependency tree.

$ npm audit

If you want to stay more secure, consider Snyk.

Snyk offers both a command-line tool and a GitHub integration that checks your application against Snyk’s open source vulnerability database for any known vulnerabilities in your dependencies. Install the CLI as follows:

$ npm install -g snyk

$ cd your-app

Use this command to test your application for vulnerabilities:

$ snyk test

Use this command to open a wizard that walks you through the process of applying updates or patches to fix the vulnerabilities that were found:

$ snyk wizard

Avoid other known vulnerabilities

Keep an eye out for Node Security Project or Snyk advisories that may affect Express or other modules that your app uses. In general, these databases are excellent resources for knowledge and tools about Node security.

Finally, Express apps - like any other web apps - can be vulnerable to a variety of web-based attacks. Familiarize yourself with known web vulnerabilities and take precautions to avoid them.

Additional considerations

Here are some further recommendations from the excellent Node.js Security Checklist. Refer to that blog post for all the details on these recommendations:

- Use csurf middleware to protect against cross-site request forgery (CSRF).

- Always filter and sanitize user input to protect against cross-site scripting (XSS) and command injection attacks.

- Defend against SQL injection attacks by using parameterized queries or prepared statements.

- Use the open-source sqlmap tool to detect SQL injection vulnerabilities in your app.

- Use the nmap and sslyze tools to test the configuration of your SSL ciphers, keys, and renegotiation as well as the validity of your certificate.

- Use safe-regex to ensure your regular expressions are not susceptible to regular expression denial of service attacks.
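As one small illustration of the advice to sanitize user input, the sketch below escapes the characters that are significant in HTML before user-supplied text is echoed into a page. `escapeHtml` is a hypothetical helper written for this example; most template engines already escape output by default, and this does not replace validating input on the way in.

```javascript
// Hypothetical helper: escape the five characters that are significant in HTML
// so user-supplied text is rendered as text rather than interpreted as markup.
function escapeHtml (text) {
  const replacements = {
    '&': '&amp;',
    '<': '&lt;',
    '>': '&gt;',
    '"': '&quot;',
    "'": '&#39;'
  }
  return String(text).replace(/[&<>"']/g, (ch) => replacements[ch])
}

// Usage in a route handler (app is assumed to exist):
// app.get('/hello', (req, res) => res.send('Hello, ' + escapeHtml(req.query.name)))
```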

Production best practices: performance and reliability

Overview

This article discusses performance and reliability best practices for Express applications deployed to production.

This topic clearly falls into the “devops” world, spanning both traditional development and operations. Accordingly, the information is divided into two parts:

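- Things to do in your code (the dev part):
  - Use gzip compression
  - Don’t use synchronous functions
  - Do logging correctly
  - Handle exceptions properly

- Things to do in your environment / setup (the ops part):
  - Set NODE_ENV to “production”
  - Ensure your app automatically restarts
  - Run your app in a cluster
  - Cache request results
  - Use a load balancer
  - Use a reverse proxy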

Things to do in your code

Here are some things you can do in your code to improve your application’s performance:

Use gzip compression

Gzip compressing can greatly decrease the size of the response body and hence increase the speed of a web app. Use the compression middleware for gzip compression in your Express app. For example:

var compression = require('compression')

var express = require('express')

var app = express()

app.use(compression())

For a high-traffic website in production, the best way to put compression in place is to implement it at a reverse proxy level (see Use a reverse proxy). In that case, you do not need to use compression middleware. For details on enabling gzip compression in Nginx, see Module ngx_http_gzip_module in the Nginx documentation.

Don’t use synchronous functions

Synchronous functions and methods tie up the executing process until they return. A single call to a synchronous function might return in a few microseconds or milliseconds; however, in high-traffic websites, these calls add up and reduce the performance of the app. Avoid their use in production.

Although Node and many modules provide synchronous and asynchronous versions of their functions, always use the asynchronous version in production. The only time when a synchronous function can be justified is upon initial startup.

If you are using Node.js 4.0+ or io.js 2.1.0+, you can use the --trace-sync-io command-line flag to print a warning and a stack trace whenever your application uses a synchronous API. Of course, you wouldn’t want to use this in production, but rather to ensure that your code is ready for production. See the node command-line options documentation for more information.
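The distinction between startup and request time can be sketched with Node's built-in fs module. The function names and the idea of a config file and a template file are assumptions made for this example.

```javascript
const fs = require('fs')

// Acceptable: one synchronous read while the app is starting up,
// before it accepts any requests. (Hypothetical config loader.)
function loadConfigAtStartup (configPath) {
  return JSON.parse(fs.readFileSync(configPath, 'utf8'))
}

// Preferred while serving requests: the promise-based API returns
// immediately and does not block the event loop during the read.
function readTemplate (templatePath) {
  return fs.promises.readFile(templatePath, 'utf8')
}

// Usage in a route handler (app is assumed to exist):
// app.get('/', (req, res, next) => {
//   readTemplate('./views/index.html').then((html) => res.send(html)).catch(next)
// })
```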

Do logging correctly

In general, there are two reasons for logging from your app: for debugging and for logging app activity (essentially, everything else). Using console.log() or console.error() to print log messages to the terminal is common practice in development. But these functions are synchronous when the destination is a terminal or a file, so they are not suitable for production, unless you pipe the output to another program.

For debugging

If you’re logging for purposes of debugging, then instead of using console.log(), use a special debugging module like debug. This module enables you to use the DEBUG environment variable to control what debug messages are sent to console.error(), if any. To keep your app purely asynchronous, you’d still want to pipe console.error() to another program. But then, you’re not really going to debug in production, are you?

For app activity

If you’re logging app activity (for example, tracking traffic or API calls), instead of using console.log(), use a logging library like Winston or Bunyan. For a detailed comparison of these two libraries, see the StrongLoop blog post Comparing Winston and Bunyan Node.js Logging.

Handle exceptions properly

Node apps crash when they encounter an uncaught exception. Not handling exceptions and taking appropriate actions will make your Express app crash and go offline. If you follow the advice in Ensure your app automatically restarts below, then your app will recover from a crash. Fortunately, Express apps typically have a short startup time. Nevertheless, you want to avoid crashing in the first place, and to do that, you need to handle exceptions properly.

To ensure you handle all exceptions, use the following techniques:

- Use try-catch

- Use promises

Before diving into these topics, you should have a basic understanding of Node/Express error handling: using error-first callbacks, and propagating errors in middleware. Node uses an “error-first callback” convention for returning errors from asynchronous functions, where the first parameter to the callback function is the error object, followed by result data in succeeding parameters. To indicate no error, pass null as the first parameter. The callback function must correspondingly follow the error-first callback convention to meaningfully handle the error. And in Express, the best practice is to use the next() function to propagate errors through the middleware chain.
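As a minimal sketch of both conventions together: `findUser` below is a hypothetical error-first function, and `userHandler` is a hypothetical Express-style middleware that forwards any error with next().

```javascript
// Error-first convention: the callback receives (err, result).
// findUser is a hypothetical lookup that only knows about user 1.
function findUser (id, callback) {
  setImmediate(() => {
    if (id !== 1) return callback(new Error('User not found'))
    callback(null, { id: 1, name: 'Ada' }) // null signals "no error"
  })
}

// Express-style middleware: pass errors to next() instead of throwing.
function userHandler (req, res, next) {
  findUser(Number(req.params.id), (err, user) => {
    if (err) return next(err) // handed on to the error-handling middleware
    res.json(user)
  })
}

// Usage in an Express app (app is assumed to exist):
// app.get('/users/:id', userHandler)
```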

For more on the fundamentals of error handling, see:

What not to do

One thing you should not do is to listen for the uncaughtException event, emitted when an exception bubbles all the way back to the event loop. Adding an event listener for uncaughtException will change the default behavior of the process that is encountering an exception; the process will continue to run despite the exception. This might sound like a good way of preventing your app from crashing, but continuing to run the app after an uncaught exception is a dangerous practice and is not recommended, because the state of the process becomes unreliable and unpredictable.

Additionally, using uncaughtException is officially recognized as crude. So listening for uncaughtException is just a bad idea. This is why we recommend things like multiple processes and supervisors: crashing and restarting is often the most reliable way to recover from an error.

We also don’t recommend using domains. The domain module generally doesn’t solve the problem and is deprecated.

Use try-catch

Try-catch is a JavaScript language construct that you can use to catch exceptions in synchronous code. Use try-catch, for example, to handle JSON parsing errors as shown below.

Use a tool such as JSHint or JSLint to help you find implicit exceptions like reference errors on undefined variables.

Here is an example of using try-catch to handle a potential process-crashing exception. This middleware function accepts a query field parameter named “params” that is a JSON object.

    app.get('/search', function (req, res) {
      // Simulating async operation
      setImmediate(function () {
        var jsonStr = req.query.params
        try {
          var jsonObj = JSON.parse(jsonStr)
          res.send('Success')
        } catch (e) {
          res.status(400).send('Invalid JSON string')
        }
      })
    })

However, try-catch works only for synchronous code. Because the Node platform is primarily asynchronous (particularly in a production environment), try-catch won’t catch a lot of exceptions.

Use promises

Promises will handle any exceptions (both explicit and implicit) in asynchronous code blocks that use then(). Just add .catch(next) to the end of promise chains. For example:

    app.get('/', function (req, res, next) {
      // do some sync stuff
      queryDb()
        .then(function (data) {
          // handle data
          return makeCsv(data)
        })
        .then(function (csv) {
          // handle csv
        })
        .catch(next)
    })

    app.use(function (err, req, res, next) {
      // handle error
    })

Now all errors, asynchronous and synchronous, get propagated to the error middleware.

However, there are two caveats:

- All your asynchronous code must return promises (except emitters). If a particular library does not return promises, convert the base object by using a helper function like Bluebird.promisifyAll().

- Event emitters (like streams) can still cause uncaught exceptions. So make sure you are handling the error event properly; for example:

    const wrap = fn => (...args) => fn(...args).catch(args[2])

    app.get('/', wrap(async (req, res, next) => {
      const company = await getCompanyById(req.query.id)
      const stream = getLogoStreamById(company.id)
      stream.on('error', next).pipe(res)
    }))

The wrap() function is a wrapper that catches rejected promises and calls next() with the error as the first argument. For details, see Asynchronous Error Handling in Express with Promises, Generators and ES7.

For more information about error-handling by using promises, see Promises in Node.js with Q – An Alternative to Callbacks.
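For the first caveat, Node's built-in util.promisify can convert a single error-first function into one that returns a promise, as an alternative to a library helper. The sketch below combines it with a variant of the wrap() function shown earlier; `queryDbCallback`, `wrapAsync`, and the failing query are hypothetical and exist only to show the rejection reaching next().

```javascript
const util = require('util')

// A hypothetical callback-style function that always fails...
function queryDbCallback (sql, callback) {
  setImmediate(() => callback(new Error('connection refused')))
}

// ...converted into a promise-returning function with util.promisify.
const queryDb = util.promisify(queryDbCallback)

// Variant of wrap(): starting from Promise.resolve() means that a
// synchronous throw inside fn is also routed to next().
const wrapAsync = (fn) => (req, res, next) =>
  Promise.resolve().then(() => fn(req, res, next)).catch(next)

const handler = wrapAsync(async (req, res) => {
  const rows = await queryDb('SELECT 1')
  res.json(rows)
})

// Usage in an Express app (app is assumed to exist):
// app.get('/', handler)
```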

Things to do in your environment / setup

Here are some things you can do in your system environment to improve your app’s performance:

- Set NODE_ENV to “production”

- Ensure your app automatically restarts

- Run your app in a cluster

- Cache request results

- Use a load balancer

- Use a reverse proxy

Set NODE_ENV to “production”

The NODE_ENV environment variable specifies the environment in which an application is running (usually, development or production). One of the simplest things you can do to improve performance is to set NODE_ENV to “production.”

Setting NODE_ENV to “production” makes Express:

- Cache view templates.

- Cache CSS files generated from CSS extensions.

- Generate less verbose error messages.

Tests indicate that just doing this can improve app performance by a factor of three!

If you need to write environment-specific code, you can check the value of NODE_ENV with process.env.NODE_ENV. Be aware that checking the value of any environment variable incurs a performance penalty, and so should be done sparingly.
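One way to check sparingly is to read the variable once at startup and reuse the result. The sketch below does that for a hypothetical error handler that returns less detail in production; `createErrorHandler` and the response shape are assumptions for this example, not Express built-ins.

```javascript
// Read the environment variable once, at startup, and reuse the result.
const isProduction = process.env.NODE_ENV === 'production'

// Hypothetical factory for an error-handling middleware:
// detailed output in development, a generic message in production.
function createErrorHandler (production) {
  return function errorHandler (err, req, res, next) {
    const body = production
      ? { message: 'Internal Server Error' }
      : { message: err.message, stack: err.stack }
    res.status(500).json(body)
  }
}

// Usage in an Express app (app is assumed to exist):
// app.use(createErrorHandler(isProduction))
```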

In development, you typically set environment variables in your interactive shell, for example by using export or your .bash_profile file. But in general you shouldn’t do that on a production server; instead, use your OS’s init system (systemd or Upstart). The next section provides more details about using your init system in general, but setting NODE_ENV is so important for performance (and easy to do), that it’s highlighted here.

With Upstart, use the env keyword in your job file. For example:

# /etc/init/env.conf

env NODE_ENV=production

For more information, see the Upstart Intro, Cookbook and Best Practices.

With systemd, use the Environment directive in your unit file. For example:

# /etc/systemd/system/myservice.service

Environment=NODE_ENV=production

For more information, see Using Environment Variables In systemd Units.

Ensure your app automatically restarts

In production, you don’t want your application to be offline, ever. This means you need to make sure it restarts both if the app crashes and if the server itself crashes. Although you hope that neither of those events occurs, realistically you must account for both eventualities by:

- Using a process manager to restart the app (and Node) when it crashes.

- Using the init system provided by your OS to restart the process manager when the OS crashes. It’s also possible to use the init system without a process manager.

Node applications crash if they encounter an uncaught exception. The foremost thing you need to do is to ensure your app is well-tested and handles all exceptions (see handle exceptions properly for details). But as a fail-safe, put a mechanism in place to ensure that if and when your app crashes, it will automatically restart.

Use a process manager

In development, you started your app simply from the command line with node server.js or something similar. But doing this in production is a recipe for disaster. If the app crashes, it will be offline until you restart it. To ensure your app restarts if it crashes, use a process manager. A process manager is a “container” for applications that facilitates deployment, provides high availability, and enables you to manage the application at runtime.

In addition to restarting your app when it crashes, a process manager can enable you to:

- Gain insights into runtime performance and resource consumption.

- Modify settings dynamically to improve performance.

- Control clustering (StrongLoop PM and pm2).

The most popular process managers for Node are as follows:

For a feature-by-feature comparison of the three process managers, see http://strong-pm.io/compare/. For a more detailed introduction to all three, see Process managers for Express apps.

Using any of these process managers will suffice to keep your application up, even if it does crash from time to time.

However, StrongLoop PM has lots of features that specifically target production deployment. You can use it and the related StrongLoop tools to:

- Build and package your app locally, then deploy it securely to your production system.

- Automatically restart your app if it crashes for any reason.

- Manage your clusters remotely.

- View CPU profiles and heap snapshots to optimize performance and diagnose memory leaks.

- View performance metrics for your application.

- Easily scale to multiple hosts with integrated control for Nginx load balancer.

As explained below, when you install StrongLoop PM as an operating system service using your init system, it will automatically restart when the system restarts. Thus, it will keep your application processes and clusters alive forever.

Use an init system

The next layer of reliability is to ensure that your app restarts when the server restarts. Systems can still go down for a variety of reasons. To ensure that your app restarts if the server crashes, use the init system built into your OS. The two main init systems in use today are systemd and Upstart.

There are two ways to use init systems with your Express app:

- Run your app in a process manager, and install the process manager as a service with the init system. The process manager will restart your app when the app crashes, and the init system will restart the process manager when the OS restarts. This is the recommended approach.

- Run your app (and Node) directly with the init system. This is somewhat simpler, but you don’t get the additional advantages of using a process manager.

Systemd

Systemd is a Linux system and service manager. Most major Linux distributions have adopted systemd as their default init system.

A systemd service configuration file is called a unit file, with a filename ending in .service. Here’s an example unit file to manage a Node app directly. Replace the values enclosed in <angle brackets> for your system and app:

[Unit]

Description=<Awesome Express App>

[Service]

Type=simple

ExecStart=/usr/local/bin/node </projects/myapp/index.js>

WorkingDirectory=</projects/myapp>

User=nobody

Group=nogroup

# Environment variables:

Environment=NODE_ENV=production

# Allow many incoming connections

LimitNOFILE=infinity

# Allow core dumps for debugging

LimitCORE=infinity

StandardInput=null

StandardOutput=syslog

StandardError=syslog

Restart=always

[Install]

WantedBy=multi-user.target

For more information on systemd, see the systemd reference (man page).

StrongLoop PM as a systemd service

You can easily install StrongLoop Process Manager as a systemd service. After you do, when the server restarts, it will automatically restart StrongLoop PM, which will then restart all the apps it is managing.

To install StrongLoop PM as a systemd service:

$ sudo sl-pm-install --systemd

Then start the service with:

$ sudo /usr/bin/systemctl start strong-pm

For more information, see Setting up a production host (StrongLoop documentation).

Upstart

Upstart is a system tool available on many Linux distributions for starting tasks and services during system startup, stopping them during shutdown, and supervising them. You can configure your Express app or process manager as a service and then Upstart will automatically restart it when it crashes.

An Upstart service is defined in a job configuration file (also called a “job”) with filename ending in .conf. The following example shows how to create a job called “myapp” for an app named “myapp” with the main file located at /projects/myapp/index.js.

Create a file named myapp.conf at /etc/init/ with the following content (replace the app name, user, and paths with values for your system and app):

# When to start the process

start on runlevel [2345]

# When to stop the process

stop on runlevel [016]

# Increase file descriptor limit to be able to handle more requests

limit nofile 50000 50000

# Use production mode

env NODE_ENV=production

# Run as www-data

setuid www-data

setgid www-data

# Run from inside the app dir

chdir /projects/myapp

# The process to start

exec /usr/local/bin/node /projects/myapp/index.js

# Restart the process if it is down

respawn

# Limit restart attempt to 10 times within 10 seconds

respawn limit 10 10

NOTE: This script requires Upstart 1.4 or newer, supported on Ubuntu 12.04-14.10.

Since the job is configured to run when the system starts, your app will be started along with the operating system, and automatically restarted if the app crashes or the system goes down.

Apart from automatically restarting the app, Upstart enables you to use these commands:

- start myapp – Start the app

- restart myapp – Restart the app

- stop myapp – Stop the app

For more information on Upstart, see Upstart Intro, Cookbook and Best Practises.

StrongLoop PM as an Upstart service

You can easily install StrongLoop Process Manager as an Upstart service. After you do, when the server restarts, it will automatically restart StrongLoop PM, which will then restart all the apps it is managing.

To install StrongLoop PM as an Upstart 1.4 service:

$ sudo sl-pm-install

Then run the service with:

$ sudo /sbin/initctl start strong-pm

NOTE: On systems that don’t support Upstart 1.4, the commands are slightly different. See Setting up a production host (StrongLoop documentation) for more information.

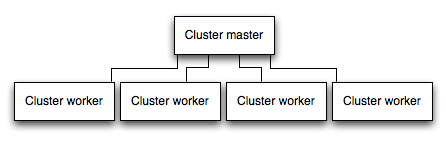

Run your app in a cluster

In a multi-core system, you can increase the performance of a Node app by many times by launching a cluster of processes. A cluster runs multiple instances of the app, ideally one instance on each CPU core, thereby distributing the load and tasks among the instances.

IMPORTANT: Since the app instances run as separate processes, they do not share the same memory space. That is, objects are local to each instance of the app. Therefore, you cannot maintain state in the application code. However, you can use an in-memory datastore like Redis to store session-related data and state. This caveat applies to essentially all forms of horizontal scaling, whether clustering with multiple processes or multiple physical servers.

In clustered apps, worker processes can crash individually without affecting the rest of the processes. Apart from performance advantages, failure isolation is another reason to run a cluster of app processes. Whenever a worker process crashes, always make sure to log the event and spawn a new process using cluster.fork().
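The log-and-respawn pattern can be sketched as follows. `superviseWorkers` is a hypothetical helper; it receives the cluster API as a parameter only so that the logic can be exercised without forking real processes, and the commented usage shows how it might be wired to Node's cluster module.

```javascript
// Hypothetical helper: start workerCount workers and replace any that exit.
// clusterApi is expected to behave like Node's cluster module.
function superviseWorkers (clusterApi, workerCount, log) {
  for (let i = 0; i < workerCount; i++) {
    clusterApi.fork()
  }
  // The 'exit' event fires in the primary process whenever a worker dies.
  clusterApi.on('exit', (worker, code, signal) => {
    log('worker ' + worker.process.pid + ' exited (code=' + code +
      ', signal=' + signal + '); starting a replacement')
    clusterApi.fork()
  })
}

// Usage sketch (not executed here):
// const cluster = require('cluster')
// const os = require('os')
// if (cluster.isPrimary) { // cluster.isMaster on Node versions before 16
//   superviseWorkers(cluster, os.cpus().length, console.error)
// } else {
//   require('./app') // hypothetical module that starts the Express server
// }
```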

Using Node’s cluster module

Clustering is made possible with Node’s cluster module. This enables a master process to spawn worker processes and distribute incoming connections among the workers. However, rather than using this module directly, it’s far better to use one of the many tools out there that does it for you automatically; for example node-pm or cluster-service.

Using StrongLoop PM

If you deploy your application to StrongLoop Process Manager (PM), then you can take advantage of clustering without modifying your application code.

When StrongLoop Process Manager (PM) runs an application, it automatically runs it in a cluster with a number of workers equal to the number of CPU cores on the system. You can manually change the number of worker processes in the cluster using the slc command line tool without stopping the app.

For example, assuming you’ve deployed your app to prod.foo.com and StrongLoop PM is listening on port 8701 (the default), then to set the cluster size to eight using slc:

$ slc ctl -C http://prod.foo.com:8701 set-size my-app 8

For more information on clustering with StrongLoop PM, see Clustering in StrongLoop documentation.

Using PM2

If you deploy your application with PM2, then you can take advantage of clustering without modifying your application code. You should ensure your application is stateless first, meaning no local data is stored in the process (such as sessions, websocket connections and the like).

When running an application with PM2, you can enable cluster mode to run it in a cluster with a number of instances of your choosing, such as matching the number of available CPUs on the machine. You can manually change the number of processes in the cluster using the pm2 command line tool without stopping the app.

To enable cluster mode, start your application like so:

# Start 4 worker processes

$ pm2 start app.js -i 4

# Auto-detect number of available CPUs and start that many worker processes

$ pm2 start app.js -i max

This can also be configured within a PM2 process file (ecosystem.config.js or similar) by setting exec_mode to cluster and instances to the number of workers to start.

Once running, a given application with the name app can be scaled like so:

# Add 3 more workers

$ pm2 scale app +3

# Scale to a specific number of workers

$ pm2 scale app 2

For more information on clustering with PM2, see Cluster Mode in the PM2 documentation.

Cache request results

Another strategy to improve the performance in production is to cache the result of requests, so that your app does not repeat the operation to serve the same request repeatedly.

Use a caching server like Varnish or Nginx (see also Nginx Caching) to greatly improve the speed and performance of your app.

Use a load balancer

No matter how optimized an app is, a single instance can handle only a limited amount of load and traffic. One way to scale an app is to run multiple instances of it and distribute the traffic via a load balancer. Setting up a load balancer can improve your app’s performance and speed, and enable it to scale more than is possible with a single instance.

A load balancer is usually a reverse proxy that orchestrates traffic to and from multiple application instances and servers. You can easily set up a load balancer for your app by using Nginx or HAProxy.

With load balancing, you might have to ensure that requests that are associated with a particular session ID connect to the process that originated them. This is known as session affinity, or sticky sessions, and may be addressed by the suggestion above to use a data store such as Redis for session data (depending on your application). For a discussion, see Using multiple nodes.

Use a reverse proxy

A reverse proxy sits in front of a web app and performs supporting operations on the requests, apart from directing requests to the app. It can handle error pages, compression, caching, serving files, and load balancing among other things.

Handing over tasks that do not require knowledge of application state to a reverse proxy frees up Express to perform specialized application tasks. For this reason, it is recommended to run Express behind a reverse proxy like Nginx or HAProxy in production.